Artificial Intelligence in Information Security

The term artificial intelligence (AI) has become established, even though existing models are still a long way from artificial general intelligence (AGI). Models such as ChatGPT or Claude have been and are being used, for example, to write phishing emails, and tools such as Copilot can be used to write malicious code. At the same time, practically all information security software, from firewalls to endpoint security, is advertised as having integrated AI. Artificial intelligence therefore has a direct impact on information security.

AI in offensive IT security

In offensive IT security, i.e. when used by attackers, artificial intelligence is in most cases still limited to social engineering: text written by a large language model (LLM) that is grammatically correct, especially in foreign languages.

Social Engineering

The ability of generative AI systems to analyze and reproduce individual communication styles is now also leading to convincing deepfake attacks with video and sound, as well as simpler voice-swapping attacks in which existing video material is merely given a new audio track. The effort required for a convincing fake is still quite high in some cases, but the available tools are rapidly improving and becoming cheaper.

Another strength of generative AI and LLMs is the ability to guess complete passphrases from only partial information, which weakens the security of long passwords and passphrases in particular.
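The effect of partial knowledge on a passphrase can be made concrete with a short calculation. The following is a minimal sketch, assuming a passphrase whose words are drawn uniformly and independently from a fixed word list (here the 7,776 words of a Diceware-style list); the function name is chosen for illustration only.

```python
import math

def remaining_entropy_bits(wordlist_size, total_words, known_words):
    """Entropy left in a passphrase once some of its words are known.

    Assumes every word is chosen uniformly and independently from the list.
    """
    unknown_words = total_words - known_words
    return unknown_words * math.log2(wordlist_size)

# Six-word passphrase from a 7,776-word list, nothing known to the attacker.
full = remaining_entropy_bits(7776, 6, 0)

# The same passphrase after three of the six words have leaked or been guessed.
partial = remaining_entropy_bits(7776, 6, 3)

print(f"unknown passphrase: {full:.1f} bits")
print(f"three words known:  {partial:.1f} bits")
```

Under these assumptions, knowing half of the words halves the remaining entropy from roughly 77.5 to roughly 38.8 bits. Phrases chosen by humans are not uniform, so a model that predicts likely continuations can reduce the effective search space further.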

Penetration Tests

Automated systems can also search systems efficiently for vulnerabilities, in particular through fuzzing guided by machine learning (ML), an advanced type of fuzzing with automatic adaptation, and can now also generate exploits for the vulnerabilities found. Other systems such as DeepExploit learn to use Metasploit exploits independently through reinforcement learning and can carry out penetration tests, including report generation, almost completely automatically.

Significant progress can be expected in the near future, particularly in automated penetration testing in conjunction with various machine learning methods and large test models, which can be provided by online hacking platforms, for example.

AI in defensive IT security

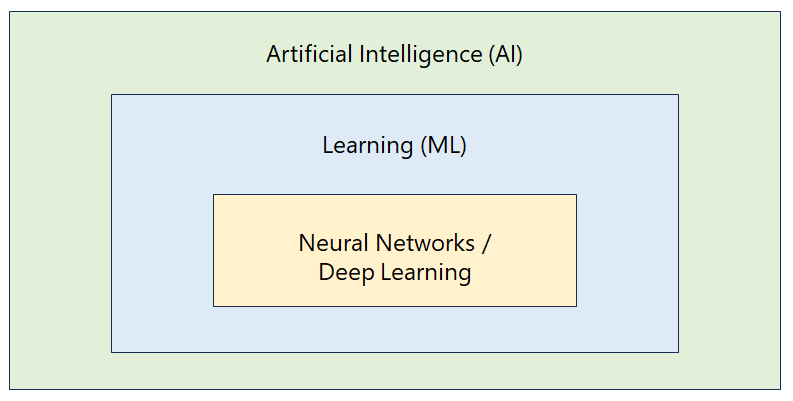

Virtually all information security systems today are advertised as using AI, largely for marketing reasons. In practice, however, these are almost always methods based on big data and machine learning that are used for pattern recognition, not generative or even general artificial intelligence.

Technical Information Security

In firewalls and intrusion detection systems, AI can detect anomalies in data traffic at an early stage and react faster than a human administrator. Endpoint security systems can identify deviating usage behavior and thus distinguish legitimate users from attackers who have stolen credentials and gained access to the system.
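Anomaly detection of this kind can be illustrated with a very simple statistical detector. The following is a minimal sketch, not a description of any specific product: it learns a baseline from traffic assumed to be normal and flags values that deviate by more than a chosen number of standard deviations. The sample values, function names and threshold are assumptions made for illustration.

```python
from statistics import mean, stdev

def fit_baseline(samples):
    """Learn mean and standard deviation from traffic regarded as normal."""
    return mean(samples), stdev(samples)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical requests-per-minute counts observed during normal operation.
normal_traffic = [98, 102, 101, 97, 103, 99, 100, 104, 96, 100]
baseline = fit_baseline(normal_traffic)

# New observations; only the sudden spike deviates strongly from the baseline.
observed = [101, 99, 180, 102]
alerts = [value for value in observed if is_anomalous(value, baseline)]
print(alerts)
```

Real products use far richer features and models, but the principle is the same: learn what normal looks like and report deviations from it.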

In a Security Operations Center (SOC), AI is used in particular to make a pre-selection for the SOC analyst in order to make optimal use of the human analyst's time. AI systems are particularly useful for risk analysis and triage and can significantly speed up response times.
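The pre-selection step can be sketched as a simple risk-based ranking. The following is a minimal illustration under stated assumptions: the `Alert` fields, the scoring formula (severity times asset value times model confidence) and the example alerts are all hypothetical and not taken from any particular SOC tool.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    name: str
    severity: int      # 1 (low) to 5 (critical), hypothetical scale
    asset_value: int   # 1 (test system) to 5 (business critical), hypothetical scale
    confidence: float  # detector confidence between 0.0 and 1.0

def risk_score(alert):
    """Combine severity, asset value and detector confidence into one score."""
    return alert.severity * alert.asset_value * alert.confidence

def triage(alerts, top_n):
    """Return the top_n alerts with the highest risk score, highest first."""
    return sorted(alerts, key=risk_score, reverse=True)[:top_n]

alerts = [
    Alert("Port scan from the internet", severity=2, asset_value=2, confidence=0.9),
    Alert("Credential reuse on domain controller", severity=5, asset_value=5, confidence=0.6),
    Alert("Malware signature on test VM", severity=4, asset_value=1, confidence=0.95),
]

for alert in triage(alerts, top_n=2):
    print(f"{risk_score(alert):5.1f}  {alert.name}")
```

The analyst then sees the alert on the domain controller first, even though the detector is less certain about it than about the other two.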

However, a fundamental risk of the machine learning methods used is that they require a stable, i.e. stationary, distribution of the observed data for reliable detection. This assumption no longer holds in the case of an adaptive attacker who can adjust their behavior.
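How an adaptive attacker undermines a detector that relies on a stable data distribution can be shown with a small simulation. The following is a minimal sketch, assuming a z-score detector that continuously re-learns its baseline from a sliding window; the traffic values, window size and threshold are chosen for illustration only.

```python
from collections import deque
from statistics import mean, stdev

def detect(stream, window=10, threshold=3.0):
    """Return the indices flagged by a detector that keeps re-learning its baseline."""
    history = deque(maxlen=window)
    flagged = []
    for index, value in enumerate(stream):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(index)
                continue  # flagged values are not added to the baseline
        history.append(value)
    return flagged

# Hypothetical normal traffic: around 100 requests per minute.
normal = [98, 102] * 5

# A sudden jump to 200 requests per minute is detected immediately.
sudden = detect(normal + [200])

# An adaptive attacker raises the rate by 2 per step until it also reaches 200.
ramp = list(range(104, 201, 2))
gradual = detect(normal + ramp)

print("sudden jump flagged at:", sudden)
print("gradual ramp flagged at:", gradual)
```

In this simulation the abrupt change is flagged, while the slow ramp reaches the same final rate without a single alert, because each small step shifts the learned baseline a little further.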

Compelling Honeypots

Since generative AI systems generate new information on the fly but can also realistically reproduce stored information, LLMs are well suited, for example, for highly interactive honeypots that fool the attacker into believing that the system is real. In particular, an LLM can also create genuine-looking but false confidential documents or email folders for the attacker. Even genuine data already copied by the attacker can lose value if the attacker can no longer distinguish between genuine and false data.

AI as a Possible Security Risk

Systems with artificial intelligence are not only used offensively and defensively but can also pose a security risk in themselves.

LLM Hallucinations

Large language models such as ChatGPT from OpenAI, Claude from Anthropic or Gemini from Google are primarily generative AI systems that use a probabilistic method to determine which sequence of words (or, in the case of image generation, which arrangement of pixels) best matches a query. LLMs are therefore very good at finding approximately correct or at least plausible answers to vague questions, or at structuring and summarizing unstructured data approximately correctly.
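The probabilistic selection described above can be sketched in a few lines. The following is a simplified illustration, not the implementation of any real model: it converts hypothetical scores for three candidate words into probabilities with a softmax function and then draws the next word at random according to those probabilities. The prompt, candidates and scores are invented for this example.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1."""
    scaled = [score / temperature for score in scores]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]

def sample_next_token(candidates, scores, rng, temperature=1.0):
    """Draw one candidate at random, weighted by its softmax probability."""
    probs = softmax(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

# Hypothetical candidates and scores for the prompt "The capital of Australia is".
candidates = ["Canberra", "Sydney", "Melbourne"]
scores = [2.0, 1.5, 0.5]

probs = softmax(scores)
rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_next_token(candidates, scores, rng) for _ in range(1000)]

for word, prob in zip(candidates, probs):
    print(f"{word:10s} p={prob:.2f} drawn {samples.count(word)} times")
```

With these assumed scores, the plausible but wrong answer "Sydney" has roughly a one-in-three chance of being selected. Nothing in the selection step checks whether the chosen word is true; it only has to be likely.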

However, a fundamental problem with all LLMs is that the AI lacks any understanding of the output data. In particular, there is no check for logic or correctness. Errors in information that is only approximately correct or only plausible but actually incorrect are not recognized. This lack of understanding of what is correct or true also means that all LLMs have a certain tendency to "hallucinate" non-existent content, i.e. to invent seemingly genuine-sounding content and present it as fact.

Employees who use LLMs must therefore be specifically informed about the possible errors of the model and the risks involved in using the generated answers. In particular, the correct and intelligent selection of prompts is extremely important and must be learned.

Data Leakage Through Learned Data

Training transfers the learned data into the LLM's probability model. However, targeted queries to the LLM can cause confidential or personal data used in training to be reproduced verbatim. This behavior is known as training data extraction, a form of data leakage, and can be exploited in attacks.

Various companies have therefore restricted the use of generative AI systems or prohibited their use with confidential or personal data.
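One technical measure that can support such a policy is to filter prompts before they leave the organization. The following is a minimal sketch under stated assumptions: the two regular expressions are deliberately simple examples covering only email addresses and IPv4 addresses, and the function and placeholder names are hypothetical. A real deployment would need much broader and more robust detection.

```python
import re

# Deliberately simple, illustrative patterns; they do not cover all cases.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}

def redact(prompt):
    """Replace matches with placeholders and report what was found."""
    findings = []
    for label, pattern in PATTERNS.items():
        findings.extend((label, match) for match in pattern.findall(prompt))
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt, findings

safe_prompt, findings = redact("Ask alice@example.com about host 10.0.0.5")
print(safe_prompt)
print(findings)
```

The list of findings can additionally be logged or used to block the request entirely, depending on how strict the policy is meant to be.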

Risks Due to Limited Resources

Technically speaking, an LLM is a so-called transformer, a model that transforms an input into a new output based on what it has learned from the training data. For cost reasons, the operator of the model defines a compute budget, i.e. how much computing time may be used and to what depth calculations may be performed before a new token, e.g. a new output word, is generated. With a lower compute budget, the LLM generates simpler answers or cannot answer complex prompts correctly at all; with a higher budget, very complex requests can also be processed.

Our Service

We support you with the safe introduction of generative AI systems in your organization. We assess your AI systems for potential risks and provide you with recommendations for secure use.

We show you how you can use artificial intelligence both offensively and defensively to improve information security.